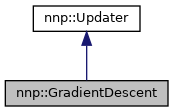

Weight updates based on simple gradient descent methods.

#include <GradientDescent.h>

Public Types

| Type | Declaration | Description |
|---|---|---|
| enum | DescentType { DT_FIXED, DT_ADAM } | Enumerate different gradient descent variants. |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | GradientDescent (std::size_t const sizeState, DescentType const type) | GradientDescent class constructor. |
| virtual | ~GradientDescent () | Destructor. |
| void | setState (double *state) | Set pointer to current state. |
| void | setError (double const *const error, std::size_t const size=1) | Set pointer to current error vector. |
| void | setJacobian (double const *const jacobian, std::size_t const columns=1) | Set pointer to current Jacobi matrix. |
| void | update () | Perform connection update. |
| void | setParametersFixed (double const eta) | Set parameters for fixed step gradient descent algorithm. |
| void | setParametersAdam (double const eta, double const beta1, double const beta2, double const epsilon) | Set parameters for Adam algorithm. |
| std::string | status (std::size_t epoch) const | Status report. |
| std::vector< std::string > | statusHeader () const | Header for status report file. |
| std::vector< std::string > | info () const | Information about gradient descent settings. |

Public Member Functions inherited from nnp::Updater

| Return type | Member | Description |
|---|---|---|
| virtual void | setupTiming (std::string const &prefix="upd") | Activate detailed timing. |
| virtual void | resetTimingLoop () | Start a new timing loop (e.g. |
| virtual std::map< std::string, Stopwatch > | getTiming () const | Return timings gathered in stopwatch map. |

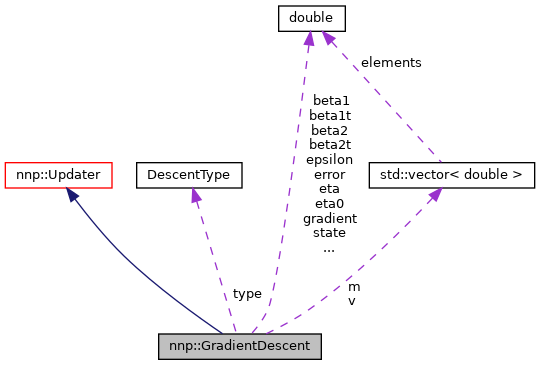

Private Attributes

| Type | Name | Description |
|---|---|---|
| DescentType | type | |
| double | eta | Learning rate \(\eta\). |
| double | beta1 | Decay rate 1 (Adam). |
| double | beta2 | Decay rate 2 (Adam). |
| double | epsilon | Small scalar. |
| double | eta0 | Initial learning rate. |
| double | beta1t | Decay rate 1 to the power of t (Adam). |
| double | beta2t | Decay rate 2 to the power of t (Adam). |
| double * | state | State vector pointer. |
| double const * | error | Error pointer (single double value). |
| double const * | gradient | Gradient vector pointer. |
| std::vector< double > | m | First moment estimate (Adam). |
| std::vector< double > | v | Second moment estimate (Adam). |

Additional Inherited Members

Protected Member Functions inherited from nnp::Updater

| Return type | Member | Description |
|---|---|---|
| | Updater (std::size_t const sizeState) | Constructor. |

Protected Attributes inherited from nnp::Updater

| Type | Name | Description |
|---|---|---|
| bool | timing | Whether detailed timing is enabled. |
| bool | timingReset | Internal loop timer reset switch. |
| std::size_t | sizeState | Number of neural network connections (weights + biases). |
| std::string | prefix | Prefix for timing stopwatches. |
| std::map< std::string, Stopwatch > | sw | Stopwatch map for timing. |

Detailed Description

Weight updates based on simple gradient descent methods.

Definition at line 29 of file GradientDescent.h.

Member Enumeration Documentation

◆ DescentType

Enumerate different gradient descent variants.

| Enumerator | Description |
|---|---|
| DT_FIXED | Fixed step size. |
| DT_ADAM | Adaptive moment estimation (Adam). |

Definition at line 33 of file GradientDescent.h.

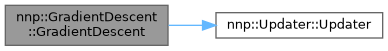

Constructor & Destructor Documentation

◆ GradientDescent()

| GradientDescent::GradientDescent | ( | std::size_t const | sizeState, |

| DescentType const | type ) |

GradientDescent class constructor.

- Parameters
  - [in] sizeState: Number of neural network connections (weights and biases).
  - [in] type: Descent type used for step size.

Definition at line 25 of file GradientDescent.cpp.

References beta1, beta1t, beta2, beta2t, DT_ADAM, DT_FIXED, epsilon, error, eta, gradient, m, nnp::Updater::sizeState, state, type, nnp::Updater::Updater(), and v.
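For orientation, sizeState is the total number of weights and biases that the updater will touch. A minimal sketch of how that number could be obtained, assuming a plain fully connected feed-forward network in which every neuron outside the input layer carries exactly one bias; the helper `countConnections` and the layer sizes used below are hypothetical and not part of n2p2.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of n2p2): counts weights + biases of a fully
// connected feed-forward network, assuming one bias per non-input neuron.
// layers holds the number of neurons per layer, input layer first.
std::size_t countConnections(std::vector<std::size_t> const& layers)
{
    assert(!layers.empty() && "at least an input layer is required");
    std::size_t count = 0;
    for (std::size_t l = 1; l < layers.size(); ++l)
    {
        // Weights from layer l-1 into layer l, plus one bias per neuron in layer l.
        count += layers[l - 1] * layers[l] + layers[l];
    }
    return count;
}
```

For example, a 2-3-1 network gives (2·3 + 3) + (3·1 + 1) = 13 connections.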

◆ ~GradientDescent()

| GradientDescent::~GradientDescent | ( | ) | inline virtual |

Destructor.

Member Function Documentation

◆ setState()

| void GradientDescent::setState | ( | double * | state | ) | virtual |

Set pointer to current state.

- Parameters
  - [in,out] state: Pointer to the state vector (weights vector); the vector is changed in place upon calling update().

Implements nnp::Updater.

Definition at line 58 of file GradientDescent.cpp.

References state.

◆ setError()

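| void GradientDescent::setError | ( | double const *const | error, |

| std::size_t const | size = 1 ) | virtual |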

Set pointer to current error vector.

- Parameters
  - [in] error: Pointer to the error (difference between reference and neural network potential output).
  - [in] size: Number of error vector entries.

Implements nnp::Updater.

Definition at line 65 of file GradientDescent.cpp.

References error.

◆ setJacobian()

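| void GradientDescent::setJacobian | ( | double const *const | jacobian, |

| std::size_t const | columns = 1 ) | virtual |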

Set pointer to current Jacobi matrix.

- Parameters
  - [in] jacobian: Derivatives of the error with respect to the weights.
  - [in] columns: Number of gradients provided.
- Note
  - If there are \(m\) errors and \(n\) weights, the Jacobi matrix is an \(n \times m\) matrix stored in column-major order.

Implements nnp::Updater.

Definition at line 73 of file GradientDescent.cpp.

References gradient.
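The column-major convention from the note can be made concrete: each column is stored contiguously, so entry \((i, j)\) of the \(n \times m\) matrix (derivative of error \(j\) with respect to weight \(i\)) sits at offset \(i + j n\). The helper `jacobianAt` below is hypothetical and only illustrates this indexing; it is not n2p2 code. With columns = 1 the buffer is simply one gradient vector of length \(n\).

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of n2p2): reads entry (i, j) of an n x m
// matrix stored in column-major order. Column j occupies the contiguous
// block [j * n, (j + 1) * n); row i indexes the weight, column j the error.
double jacobianAt(double const* jacobian, std::size_t n, std::size_t m,
                  std::size_t i, std::size_t j)
{
    assert(i < n && j < m);
    return jacobian[i + j * n];
}
```

With \(n = 3\) weights and \(m = 2\) errors, a buffer `{1, 2, 3, 4, 5, 6}` holds the first column as `{1, 2, 3}` and the second as `{4, 5, 6}`.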

◆ update()

| void GradientDescent::update | ( | ) | virtual |

Perform connection update.

Update the connections via steepest descent, using either a fixed step size (DT_FIXED) or Adam (DT_ADAM), depending on type.

Implements nnp::Updater.

Definition at line 81 of file GradientDescent.cpp.

References beta1, beta1t, beta2, beta2t, DT_ADAM, DT_FIXED, epsilon, eta, eta0, gradient, m, nnp::Updater::sizeState, state, type, and v.
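For the fixed-step variant, a steepest-descent step subtracts the scaled gradient from every weight, \(w_i \leftarrow w_i - \eta g_i\), which matches the description of eta in setParametersFixed(). The class below is a hypothetical stand-in, not the n2p2 implementation; it only mimics the documented calling pattern, in which the caller owns the weight and gradient buffers, the updater stores raw pointers to them, and update() overwrites the weights in place. The Adam branch is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in (not the n2p2 class): mimics the documented
// setState()/setJacobian()/update() pattern for the fixed-step variant.
class MiniFixedUpdater
{
public:
    MiniFixedUpdater(std::size_t size, double stepSize)
        : sizeState(size), eta(stepSize) {}

    // Non-owning: the caller's buffer is modified in place by update().
    void setState(double* newState) { state = newState; }

    // Non-owning, read-only: one gradient vector of length sizeState.
    void setJacobian(double const* const jacobian) { gradient = jacobian; }

    // Steepest-descent step: w_i <- w_i - eta * g_i for every connection.
    void update()
    {
        assert(state != nullptr && gradient != nullptr);
        for (std::size_t i = 0; i < sizeState; ++i)
        {
            state[i] -= eta * gradient[i];
        }
    }

private:
    std::size_t   sizeState;
    double        eta;
    double*       state    = nullptr;
    double const* gradient = nullptr;
};
```

With weights `{1.0, -2.0}`, gradient `{0.5, -4.0}` and a step size of 0.25, one call to update() leaves the caller's vector at `{0.875, -1.0}`. Because only pointers are stored, the weight buffer must stay alive and must not be reallocated (for example by resizing a `std::vector`) between setState() and update(); the same caveat presumably applies to the real class, since it also keeps a raw state pointer.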

◆ setParametersFixed()

| void GradientDescent::setParametersFixed | ( | double const | eta | ) |

Set parameters for fixed step gradient descent algorithm.

- Parameters
  - [in] eta: Step size, i.e. the factor by which the gradient is scaled before it is subtracted from the current weights.

Definition at line 120 of file GradientDescent.cpp.

References eta.

Referenced by nnp::Training::setupTraining().

◆ setParametersAdam()

| void GradientDescent::setParametersAdam | ( | double const | eta, |

| double const | beta1, | ||

| double const | beta2, | ||

| double const | epsilon ) |

Set parameters for Adam algorithm.

- Parameters
  - [in] eta: Step size (corresponds to \(\alpha\) in the Adam publication).
  - [in] beta1: Decay rate 1 (first moment).
  - [in] beta2: Decay rate 2 (second moment).
  - [in] epsilon: Small scalar added to the denominator of the update to avoid division by zero.

Definition at line 127 of file GradientDescent.cpp.

References beta1, beta1t, beta2, beta2t, epsilon, eta, and eta0.

Referenced by nnp::Training::setupTraining().
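A sketch of one Adam step for a single weight vector, written under assumptions: it uses the reformulation suggested in the Adam publication, where the bias corrections are moved into an effective step size computed from the initial rate eta0 and the running powers beta1t and beta2t. That this is how GradientDescent::update() proceeds is only suggested by the private members eta0, beta1t and beta2t; the actual order of operations is not documented on this page and may differ. The struct `AdamSketch` and its member names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch of one Adam step (not the n2p2 source). Bias correction
// is folded into an effective step size built from running powers
// beta1t = beta1^t and beta2t = beta2^t.
struct AdamSketch
{
    double eta0;              // initial step size (alpha in the Adam publication)
    double beta1;             // decay rate of the first moment
    double beta2;             // decay rate of the second moment
    double epsilon;           // small scalar guarding against division by zero
    double beta1t = 1.0;      // beta1^t, starts at t = 0
    double beta2t = 1.0;      // beta2^t, starts at t = 0
    std::vector<double> m;    // first moment estimate
    std::vector<double> v;    // second moment estimate

    AdamSketch(std::size_t n, double alpha, double b1, double b2, double eps)
        : eta0(alpha), beta1(b1), beta2(b2), epsilon(eps), m(n, 0.0), v(n, 0.0) {}

    void step(std::vector<double>& w, std::vector<double> const& g)
    {
        assert(w.size() == m.size() && g.size() == m.size());
        beta1t *= beta1;  // advance t -> t + 1
        beta2t *= beta2;
        // Effective step size: eta0 * sqrt(1 - beta2^t) / (1 - beta1^t).
        double const eta = eta0 * std::sqrt(1.0 - beta2t) / (1.0 - beta1t);
        for (std::size_t i = 0; i < w.size(); ++i)
        {
            m[i] = beta1 * m[i] + (1.0 - beta1) * g[i];
            v[i] = beta2 * v[i] + (1.0 - beta2) * g[i] * g[i];
            w[i] -= eta * m[i] / (std::sqrt(v[i]) + epsilon);
        }
    }
};
```

A quick sanity check: on the first step (t = 1) the bias corrections cancel, so each weight moves by almost exactly eta0 against the sign of its gradient component, regardless of the gradient's magnitude (up to the epsilon term).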

◆ status()

| std::string GradientDescent::status | ( | std::size_t | epoch | ) const | virtual |

Status report.

- Parameters
  - [in] epoch: Current epoch.
- Returns
  - Line with current status information.

Implements nnp::Updater.

Definition at line 144 of file GradientDescent.cpp.

References beta1t, beta2t, DT_ADAM, eta, m, nnp::Updater::sizeState, nnp::strpr(), type, and v.

◆ statusHeader()

| std::vector< std::string > GradientDescent::statusHeader | ( | ) const | virtual |

Header for status report file.

- Returns
  - Vector with header lines.

Implements nnp::Updater.

Definition at line 167 of file GradientDescent.cpp.

References nnp::createFileHeader(), DT_ADAM, and type.

◆ info()

| std::vector< std::string > GradientDescent::info | ( | ) const | virtual |

Information about gradient descent settings.

- Returns
  - Vector with info lines.

Implements nnp::Updater.

Definition at line 202 of file GradientDescent.cpp.

References beta1, beta2, DT_ADAM, DT_FIXED, epsilon, eta, nnp::Updater::sizeState, nnp::strpr(), type, and v.

Member Data Documentation

◆ type

| DescentType GradientDescent::type | private |

Definition at line 118 of file GradientDescent.h.

Referenced by GradientDescent(), info(), status(), statusHeader(), and update().

◆ eta

| double GradientDescent::eta | private |

Learning rate \(\eta\).

Definition at line 120 of file GradientDescent.h.

Referenced by GradientDescent(), info(), setParametersAdam(), setParametersFixed(), status(), and update().

◆ beta1

| double GradientDescent::beta1 | private |

Decay rate 1 (Adam).

Definition at line 122 of file GradientDescent.h.

Referenced by GradientDescent(), info(), setParametersAdam(), and update().

◆ beta2

| double GradientDescent::beta2 | private |

Decay rate 2 (Adam).

Definition at line 124 of file GradientDescent.h.

Referenced by GradientDescent(), info(), setParametersAdam(), and update().

◆ epsilon

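| double GradientDescent::epsilon | private |

Small scalar (Adam), added to the denominator of the update to avoid division by zero.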

Definition at line 126 of file GradientDescent.h.

Referenced by GradientDescent(), info(), setParametersAdam(), and update().

◆ eta0

| double GradientDescent::eta0 | private |

Initial learning rate.

Definition at line 128 of file GradientDescent.h.

Referenced by setParametersAdam(), and update().

◆ beta1t

| double GradientDescent::beta1t | private |

Decay rate 1 to the power of t (Adam).

Definition at line 130 of file GradientDescent.h.

Referenced by GradientDescent(), setParametersAdam(), status(), and update().

◆ beta2t

| double GradientDescent::beta2t | private |

Decay rate 2 to the power of t (Adam).

Definition at line 132 of file GradientDescent.h.

Referenced by GradientDescent(), setParametersAdam(), status(), and update().
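Assuming beta1t and beta2t serve the usual Adam bias correction, they can be kept current with one multiplication per update step \(t\) instead of recomputing the powers, and combined with the initial learning rate into the step size used at step \(t\). Identifying \(\eta_t\) with the member eta and \(\eta_0\) with eta0 is an assumption suggested by their descriptions (learning rate and initial learning rate), not something this page documents.

```latex
\beta_1^{t} = \beta_1 \, \beta_1^{t-1}, \qquad
\beta_2^{t} = \beta_2 \, \beta_2^{t-1}, \qquad
\beta_1^{0} = \beta_2^{0} = 1,
\qquad
\eta_t = \eta_0 \, \frac{\sqrt{1 - \beta_2^{t}}}{1 - \beta_1^{t}}
```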

◆ state

| double* GradientDescent::state | private |

State vector pointer.

Definition at line 134 of file GradientDescent.h.

Referenced by GradientDescent(), setState(), and update().

◆ error

| double const* GradientDescent::error | private |

Error pointer (single double value).

Definition at line 136 of file GradientDescent.h.

Referenced by GradientDescent(), and setError().

◆ gradient

| double const* GradientDescent::gradient | private |

Gradient vector pointer.

Definition at line 138 of file GradientDescent.h.

Referenced by GradientDescent(), setJacobian(), and update().

◆ m

| std::vector< double > GradientDescent::m | private |

First moment estimate (Adam).

Definition at line 140 of file GradientDescent.h.

Referenced by GradientDescent(), status(), and update().

◆ v

| std::vector< double > GradientDescent::v | private |

Second moment estimate (Adam).

Definition at line 142 of file GradientDescent.h.

Referenced by GradientDescent(), info(), status(), and update().

The documentation for this class was generated from the following files:

- /home/runner/work/n2p2/n2p2/src/libnnptrain/GradientDescent.h

- /home/runner/work/n2p2/n2p2/src/libnnptrain/GradientDescent.cpp