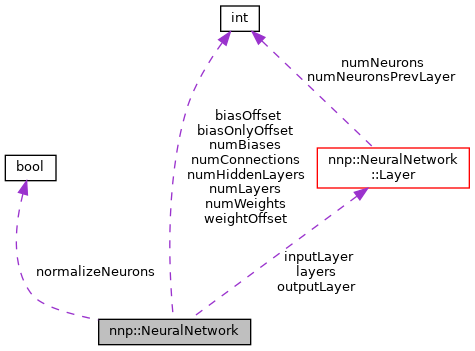

This class implements a feed-forward neural network.

#include <NeuralNetwork.h>

Classes

| Type | Name | Description |
|---|---|---|
| struct | Layer | One neural network layer. |
| struct | Neuron | A single neuron. |

Public Types

| Type | Name | Description |
|---|---|---|
| enum | ActivationFunction { AF_UNSET, AF_IDENTITY, AF_TANH, AF_LOGISTIC, AF_SOFTPLUS, AF_RELU, AF_GAUSSIAN, AF_COS, AF_REVLOGISTIC, AF_EXP, AF_HARMONIC } | List of available activation function types. |
| enum | ModificationScheme { MS_ZEROBIAS, MS_ZEROOUTPUTWEIGHTS, MS_FANIN, MS_GLOROTBENGIO, MS_NGUYENWIDROW, MS_PRECONDITIONOUTPUT } | List of available connection modification schemes. |

Public Member Functions

| Return type | Function | Description |
|---|---|---|
| | NeuralNetwork (int numLayers, int const *const &numNeuronsPerLayer, ActivationFunction const *const &activationFunctionsPerLayer) | Neural network class constructor. |
| | ~NeuralNetwork () | |
| void | setNormalizeNeurons (bool normalizeNeurons) | Turn on/off neuron normalization. |
| int | getNumNeurons () const | Return total number of neurons. |
| int | getNumConnections () const | Return total number of connections. |
| int | getNumWeights () const | Return number of weights. |
| int | getNumBiases () const | Return number of biases. |
| void | setConnections (double const *const &connections) | Set neural network weights and biases. |
| void | getConnections (double *connections) const | Get neural network weights and biases. |
| void | initializeConnectionsRandomUniform (unsigned int seed) | Initialize connections with random numbers. |
| void | modifyConnections (ModificationScheme modificationScheme) | Change connections according to a given modification scheme. |
| void | modifyConnections (ModificationScheme modificationScheme, double parameter1, double parameter2) | Change connections according to a given modification scheme. |
| void | setInput (double const *const &input) const | Set neural network input layer node values. |
| void | setInput (std::size_t const index, double const value) const | Set neural network input layer node values. |
| void | getOutput (double *output) const | Get neural network output layer node values. |
| void | propagate () | Propagate input information through all layers. |
| void | calculateDEdG (double *dEdG) const | Calculate derivative of output neuron with respect to input neurons. |
| void | calculateDEdc (double *dEdc) const | Calculate derivative of output neuron with respect to connections. |
| void | calculateDFdc (double *dFdc, double const *const &dGdxyz) const | Calculate "second" derivative of output with respect to connections. |
| void | writeConnections (std::ofstream &file) const | Write connections to file. |
| void | getNeuronStatistics (long *count, double *min, double *max, double *sum, double *sum2) const | Return gathered neuron statistics. |
| void | resetNeuronStatistics () | Reset neuron statistics. |
| long | getMemoryUsage () | |
| std::vector< std::string > | info () const | Print neural network architecture. |

Private Member Functions

| Return type | Function | Description |
|---|---|---|
| void | calculateDEdb (double *dEdb) const | Calculate derivative of output neuron with respect to biases. |
| void | calculateDxdG (int index) const | Calculate derivative of neuron values before activation function with respect to input neuron. |
| void | calculateD2EdGdc (int index, double const *const &dEdb, double *d2EdGdc) const | Calculate second derivative of output neuron with respect to input neuron and connections. |
| void | allocateLayer (Layer &layer, int numNeuronsPrevLayer, int numNeurons, ActivationFunction activationFunction) | Allocate a single layer. |
| void | propagateLayer (Layer &layer, Layer &layerPrev) | Propagate information from one layer to the next. |

Private Attributes

| Type | Name | Description |
|---|---|---|
| bool | normalizeNeurons | Whether neurons are normalized. |
| int | numWeights | Number of NN weights only. |
| int | numBiases | Number of NN biases only. |
| int | numConnections | Number of NN connections (weights + biases). |
| int | numLayers | Total number of layers (includes input and output layers). |
| int | numHiddenLayers | Number of hidden layers. |
| int * | weightOffset | Offset address of weights per layer in combined weights+bias array. |
| int * | biasOffset | Offset address of biases per layer in combined weights+bias array. |
| int * | biasOnlyOffset | Offset address of biases per layer in bias-only array. |
| Layer * | inputLayer | Pointer to input layer. |
| Layer * | outputLayer | Pointer to output layer. |
| Layer * | layers | Neural network layers. |

Detailed Description

This class implements a feed-forward neural network.

Definition at line 28 of file NeuralNetwork.h.

Member Enumeration Documentation

◆ ActivationFunction

List of available activation function types.

Definition at line 32 of file NeuralNetwork.h.

◆ ModificationScheme

List of available connection modification schemes.

| Enumerator | Description |
|---|---|
| MS_ZEROBIAS | Set all bias values to zero. |
| MS_ZEROOUTPUTWEIGHTS | Set all weights connecting to the output layer to zero. |
| MS_FANIN | Normalize weights via number of neuron inputs (fan-in). If initial weights are uniformly distributed in \(\left[-1, 1\right]\) they will be scaled to be in \(\left[\frac{-1}{\sqrt{n_\text{in}}}, \frac{1}{\sqrt{n_\text{in}}}\right]\), where \(n_\text{in}\) is the number of incoming weights of a neuron (if activation function is of type AF_TANH). |
| MS_GLOROTBENGIO | Normalize connections according to Glorot and Bengio. If initial weights are uniformly distributed in \(\left[-1, 1\right]\) they will be scaled to be in \(\left[-\sqrt{\frac{6}{n_\text{in} + n_\text{out}}}, \sqrt{\frac{6}{n_\text{in} + n_\text{out}}}\right]\), where \(n_\text{in}\) and \(n_\text{out}\) are the number of incoming and outgoing weights of a neuron, respectively (if activation function is of type AF_TANH). For details see: |
| MS_NGUYENWIDROW | Initialize connections according to the Nguyen-Widrow scheme. For details see: |
| MS_PRECONDITIONOUTPUT | Apply preconditioning to output layer connections. Multiply weights connecting to output neurons by \(\sigma\) and add "mean" to biases. Call modifyConnections with two additional arguments: modifyConnections(NeuralNetwork::MS_PRECONDITIONOUTPUT, mean, sigma); |

Definition at line 59 of file NeuralNetwork.h.
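The rescaling behind MS_FANIN and MS_GLOROTBENGIO amounts to multiplying uniformly drawn weights by a single factor per neuron. The following is a minimal standalone sketch of those factors, not n2p2 code: fanInFactor(), glorotBengioFactor() and scaleWeights() are hypothetical names, and neither the AF_TANH condition nor how n2p2 determines \(n_\text{in}\) and \(n_\text{out}\) per layer is modelled.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helpers, not part of n2p2. Weights drawn uniformly from
// [-1, 1] and multiplied by `factor` end up uniformly in [-factor, factor].

// MS_FANIN: factor 1 / sqrt(n_in), n_in = number of incoming weights.
double fanInFactor(int nIn) {
    assert(nIn > 0);
    return 1.0 / std::sqrt(static_cast<double>(nIn));
}

// MS_GLOROTBENGIO: factor sqrt(6 / (n_in + n_out)).
double glorotBengioFactor(int nIn, int nOut) {
    assert(nIn > 0 && nOut >= 0);
    return std::sqrt(6.0 / static_cast<double>(nIn + nOut));
}

// Rescale the incoming weights of one neuron in place.
void scaleWeights(std::vector<double>& weights, double factor) {
    for (double& w : weights) {
        w *= factor;
    }
}
```

For example, a neuron with four incoming weights would have its weights mapped from \([-1, 1]\) to \([-0.5, 0.5]\) under the fan-in rule.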

Constructor & Destructor Documentation

◆ NeuralNetwork()

NeuralNetwork::NeuralNetwork(int numLayers, int const *const &numNeuronsPerLayer, ActivationFunction const *const &activationFunctionsPerLayer)

Neural network class constructor.

- Parameters
  - [in] numLayers: Total number of layers (including input and output layer).
  - [in] numNeuronsPerLayer: Array with number of neurons per layer.
  - [in] activationFunctionsPerLayer: Array with activation function type per layer (note: an input layer activation function is mandatory although it is never used).

Definition at line 30 of file NeuralNetwork.cpp.

References allocateLayer(), biasOffset, biasOnlyOffset, inputLayer, layers, normalizeNeurons, numBiases, numConnections, numHiddenLayers, numLayers, numWeights, outputLayer, and weightOffset.

◆ ~NeuralNetwork()

NeuralNetwork::~NeuralNetwork()

Definition at line 97 of file NeuralNetwork.cpp.

References biasOffset, biasOnlyOffset, layers, numLayers, and weightOffset.

Member Function Documentation

◆ setNormalizeNeurons()

void NeuralNetwork::setNormalizeNeurons(bool normalizeNeurons)

Turn on/off neuron normalization.

- Parameters
  - [in] normalizeNeurons: true or false (default: false).

Definition at line 113 of file NeuralNetwork.cpp.

References normalizeNeurons.

◆ getNumNeurons()

int NeuralNetwork::getNumNeurons() const

Return total number of neurons.

Includes input and output layer.

Definition at line 120 of file NeuralNetwork.cpp.

References layers, and numLayers.

Referenced by getMemoryUsage().

◆ getNumConnections()

int NeuralNetwork::getNumConnections() const

Return total number of connections.

Connections are all weights and biases.

Definition at line 132 of file NeuralNetwork.cpp.

References numConnections.

Referenced by nnp::Training::getWeights(), nnp::Training::randomizeNeuralNetworkWeights(), and nnp::Training::setWeights().

◆ getNumWeights()

int NeuralNetwork::getNumWeights() const

Return number of weights.

◆ getNumBiases()

int NeuralNetwork::getNumBiases() const

Return number of biases.
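For a fully connected feed-forward network the counts returned by getNumWeights(), getNumBiases() and getNumConnections() follow directly from the layer sizes: layer \(i\) contributes \(n_{i-1} n_i\) weights and \(n_i\) biases, while the input layer has no biases (consistent with the connection order described in setConnections()). A hedged sketch with a hypothetical helper countConnections(), assuming every neuron is connected to all neurons of the previous layer:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type; the real class keeps these counts in the
// private members numWeights, numBiases and numConnections.
struct ConnectionCounts {
    std::size_t numWeights = 0;
    std::size_t numBiases = 0;
    std::size_t numConnections = 0;
};

// Counts for a fully connected feed-forward network; numNeuronsPerLayer
// lists the layer sizes starting with the input layer.
ConnectionCounts countConnections(const std::vector<std::size_t>& numNeuronsPerLayer) {
    assert(numNeuronsPerLayer.size() >= 2);
    ConnectionCounts counts;
    for (std::size_t i = 1; i < numNeuronsPerLayer.size(); ++i) {
        // Each neuron of layer i receives one weight from every neuron of layer i-1 ...
        counts.numWeights += numNeuronsPerLayer[i - 1] * numNeuronsPerLayer[i];
        // ... and carries one bias (the input layer has none).
        counts.numBiases += numNeuronsPerLayer[i];
    }
    counts.numConnections = counts.numWeights + counts.numBiases;
    return counts;
}
```

For layer sizes {2, 3, 1} this gives 6 + 3 = 9 weights, 3 + 1 = 4 biases and 13 connections.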

◆ setConnections()

void NeuralNetwork::setConnections(double const *const &connections)

Set neural network weights and biases.

- Parameters
  - [in] connections: One-dimensional array with neural network connections in the following order:

\[ \underbrace{ \overbrace{ a^{01}_{00}, \ldots, a^{01}_{0m_1}, a^{01}_{10}, \ldots, a^{01}_{1m_1}, \ldots, a^{01}_{n_00}, \ldots, a^{01}_{n_0m_1}, }^{\text{Weights}} \overbrace{ b^{1}_{0}, \ldots, b^{1}_{m_1} }^{\text{Biases}} }_{\text{Layer } 0 \rightarrow 1}, \underbrace{ a^{12}_{00}, \ldots, b^{2}_{m_2} }_{\text{Layer } 1 \rightarrow 2}, \ldots, \underbrace{ a^{p-1,p}_{00}, \ldots, b^{p}_{m_p} }_{\text{Layer } p-1 \rightarrow p} \]

where \(a^{i-1, i}_{jk}\) is the weight connecting neuron \(j\) in layer \(i-1\) to neuron \(k\) in layer \(i\) and \(b^{i}_{k}\) is the bias assigned to neuron \(k\) in layer \(i\).

Definition at line 147 of file NeuralNetwork.cpp.

References layers, and numLayers.

Referenced by initializeConnectionsRandomUniform(), nnp::Training::randomizeNeuralNetworkWeights(), nnp::Mode::readNeuralNetworkWeights(), and nnp::Training::setWeights().
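The connection order of setConnections() can be expressed as explicit index arithmetic. The sketch below is an illustration, not n2p2 code: layerOffset(), weightIndex() and biasIndex() are hypothetical helpers using 0-based neuron indices \(j\), \(k\) and layer sizes n[0], ..., n[p]. The private members weightOffset and biasOffset presumably hold comparable per-layer offsets, but that is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Start of the block belonging to layer i (i >= 1) in the flat array:
// every earlier layer l contributes n[l-1]*n[l] weights plus n[l] biases.
std::size_t layerOffset(const std::vector<std::size_t>& n, std::size_t i) {
    std::size_t offset = 0;
    for (std::size_t l = 1; l < i; ++l) {
        offset += n[l - 1] * n[l] + n[l];
    }
    return offset;
}

// Position of weight a^{i-1,i}_{jk}: weights of a layer are stored with the
// previous-layer neuron j as the outer and the current neuron k as the inner index.
std::size_t weightIndex(const std::vector<std::size_t>& n, std::size_t i,
                        std::size_t j, std::size_t k) {
    assert(i >= 1 && i < n.size() && j < n[i - 1] && k < n[i]);
    return layerOffset(n, i) + j * n[i] + k;
}

// Position of bias b^i_k: biases follow all weights of the same layer.
std::size_t biasIndex(const std::vector<std::size_t>& n, std::size_t i, std::size_t k) {
    assert(i >= 1 && i < n.size() && k < n[i]);
    return layerOffset(n, i) + n[i - 1] * n[i] + k;
}
```

With layer sizes {2, 3, 1}, the six weights of layer 1 occupy positions 0 to 5, its biases 6 to 8, the three weights of layer 2 positions 9 to 11, and the output bias position 12.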

◆ getConnections()

void NeuralNetwork::getConnections(double *connections) const

Get neural network weights and biases.

- Parameters
  - [out] connections: One-dimensional array with neural network connections (same order as described in setConnections()).

Definition at line 171 of file NeuralNetwork.cpp.

References layers, and numLayers.

Referenced by nnp::Training::getWeights().

◆ initializeConnectionsRandomUniform()

void NeuralNetwork::initializeConnectionsRandomUniform(unsigned int seed)

Initialize connections with random numbers.

- Parameters
  - [in] seed: Random number generator seed.

All connections (weights and biases) are initialized with random values in the \([-1, 1]\) interval, using the C standard library function rand().

Definition at line 195 of file NeuralNetwork.cpp.

References numConnections, and setConnections().
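A standalone sketch of this initialization, assuming a linear mapping from rand() onto \([-1, 1]\) (the exact mapping used in n2p2 is not stated here); randomUniformConnections() is a hypothetical name. Per the references above, the real function passes the resulting array to setConnections().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical helper: numConnections values drawn from [-1, 1] with the C
// standard library generator, reproducible for a given seed.
std::vector<double> randomUniformConnections(std::size_t numConnections, unsigned int seed) {
    assert(numConnections > 0);
    std::srand(seed);
    std::vector<double> connections(numConnections);
    for (double& c : connections) {
        // rand() is in [0, RAND_MAX]; map it linearly onto [-1, 1] (assumed mapping).
        c = -1.0 + 2.0 * static_cast<double>(std::rand()) / RAND_MAX;
    }
    return connections;
}
```

Because srand() and rand() share global state, the same seed reproduces the same values only with the same C library and only if no other code calls rand() in between.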

◆ modifyConnections() [1/2]

void NeuralNetwork::modifyConnections(ModificationScheme modificationScheme)

Change connections according to a given modification scheme.

- Parameters
  - [in] modificationScheme: Defines how the connections are modified. See ModificationScheme for possible options.

Definition at line 212 of file NeuralNetwork.cpp.

References AF_TANH, layers, MS_FANIN, MS_GLOROTBENGIO, MS_NGUYENWIDROW, MS_ZEROBIAS, MS_ZEROOUTPUTWEIGHTS, numLayers, and outputLayer.

Referenced by nnp::Training::randomizeNeuralNetworkWeights().

◆ modifyConnections() [2/2]

void NeuralNetwork::modifyConnections(ModificationScheme modificationScheme, double parameter1, double parameter2)

Change connections according to a given modification scheme.

- Parameters
  - [in] modificationScheme: Defines how the connections are modified. See ModificationScheme for possible options.
  - [in] parameter1: Additional parameter (see ModificationScheme).
  - [in] parameter2: Additional parameter (see ModificationScheme).

Definition at line 317 of file NeuralNetwork.cpp.

References MS_PRECONDITIONOUTPUT, and outputLayer.
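The MS_PRECONDITIONOUTPUT rule (mean passed as parameter1, \(\sigma\) as parameter2) can be sketched on a hypothetical stand-in type OutputNeuron. This illustrates only the documented arithmetic, not n2p2's internal data layout.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for an output-layer neuron: incoming weights + bias.
struct OutputNeuron {
    std::vector<double> weights;
    double bias;
};

// Scale every weight feeding an output neuron by sigma and add mean to its bias.
void preconditionOutput(std::vector<OutputNeuron>& outputLayer, double mean, double sigma) {
    for (OutputNeuron& neuron : outputLayer) {
        for (double& w : neuron.weights) {
            w *= sigma;
        }
        neuron.bias += mean;
    }
}
```

As described, the biases are shifted by mean but not scaled by \(\sigma\).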

◆ setInput() [1/2]

void NeuralNetwork::setInput(double const *const &input) const

Set neural network input layer node values.

- Parameters
  - [in] input: Input layer node values.

Definition at line 357 of file NeuralNetwork.cpp.

References nnp::NeuralNetwork::Neuron::count, inputLayer, nnp::NeuralNetwork::Neuron::max, nnp::NeuralNetwork::Neuron::min, nnp::NeuralNetwork::Neuron::sum, nnp::NeuralNetwork::Neuron::sum2, and nnp::NeuralNetwork::Neuron::value.

Referenced by nnp::Mode::calculateAtomicNeuralNetworks(), nnp::Training::calculateWeightDerivatives(), nnp::Training::calculateWeightDerivatives(), nnp::Training::dPdc(), and nnp::Training::update().

◆ setInput() [2/2]

void nnp::NeuralNetwork::setInput(std::size_t const index, double const value) const

Set the value of a single input layer node.

- Parameters
  - [in] index: Index of neuron to set.
  - [in] value: Input layer neuron value.

◆ getOutput()

void NeuralNetwork::getOutput(double *output) const

Get neural network output layer node values.

- Parameters
  - [out] output: Output layer node values.

Definition at line 376 of file NeuralNetwork.cpp.

References outputLayer.

Referenced by nnp::Mode::calculateAtomicNeuralNetworks(), nnp::Training::calculateWeightDerivatives(), nnp::Training::calculateWeightDerivatives(), nnp::Training::dPdc(), and nnp::Training::update().

◆ propagate()

void NeuralNetwork::propagate()

Propagate input information through all layers.

With the input data set by setInput(), this calculates all remaining neuron values; the output in the last layer is then accessible via getOutput().

Definition at line 386 of file NeuralNetwork.cpp.

References layers, numLayers, and propagateLayer().

Referenced by nnp::Mode::calculateAtomicNeuralNetworks(), nnp::Training::calculateWeightDerivatives(), nnp::Training::calculateWeightDerivatives(), nnp::Training::dPdc(), and nnp::Training::update().
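Conceptually, propagate() applies propagateLayer() to each layer in turn: every neuron computes \(x_k = b_k + \sum_j a_{jk} y_j\) from the previous layer's values \(y_j\) and then applies its activation function. The sketch below is not n2p2 code; it assumes hypothetical types DenseLayer and Activation, supports only identity and tanh, and ignores neuron normalization (setNormalizeNeurons()) and neuron statistics.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical types; only two activation functions are sketched.
enum class Activation { Identity, Tanh };

struct DenseLayer {
    std::vector<std::vector<double>> weights;  // weights[k][j]: previous neuron j -> neuron k
    std::vector<double> biases;                // one bias per neuron k
    Activation activation;
};

// One step: x_k = b_k + sum_j a_jk * y_j, then y_k = f(x_k).
std::vector<double> propagateLayer(const DenseLayer& layer, const std::vector<double>& prev) {
    std::vector<double> out(layer.biases.size());
    for (std::size_t k = 0; k < out.size(); ++k) {
        assert(layer.weights[k].size() == prev.size());
        double x = layer.biases[k];
        for (std::size_t j = 0; j < prev.size(); ++j) {
            x += layer.weights[k][j] * prev[j];
        }
        out[k] = (layer.activation == Activation::Tanh) ? std::tanh(x) : x;
    }
    return out;
}

// Full pass: `values` plays the role of the input set via setInput(), the
// returned vector that of the output read via getOutput().
std::vector<double> propagate(const std::vector<DenseLayer>& layers, std::vector<double> values) {
    for (const DenseLayer& layer : layers) {
        values = propagateLayer(layer, values);
    }
    return values;
}
```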

◆ calculateDEdG()

void NeuralNetwork::calculateDEdG(double *dEdG) const

Calculate derivative of output neuron with respect to input neurons.

- Parameters
  - [out] dEdG: Array containing derivative (length is number of input neurons).

CAUTION: This works only for neural networks with a single output neuron!

Returns \(\left(\frac{dE}{dG_i}\right)_{i=1,\ldots,N}\), where \(E\) is the output neuron and \(\left(G_i\right)_{i=1,\ldots,N}\) are the \(N\) input neurons.

Definition at line 396 of file NeuralNetwork.cpp.

References layers, normalizeNeurons, and numHiddenLayers.

Referenced by nnp::Mode::calculateAtomicNeuralNetworks(), nnp::Training::dPdc(), and nnp::Training::update().
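For a single output neuron, \(\frac{dE}{dG_i}\) can be obtained with one backward sweep of the chain rule (reverse-mode differentiation). This is a sketch under stated assumptions, not the n2p2 implementation: SketchLayer, forward() and dEdG() are hypothetical names, only tanh and identity activations are supported, and neuron normalization is not modelled.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical layer: weights[k][j] connects neuron j of the previous layer
// to neuron k of this layer; useTanh selects tanh, otherwise identity.
struct SketchLayer {
    std::vector<std::vector<double>> weights;
    std::vector<double> biases;
    bool useTanh;
};

static double activate(bool useTanh, double x) { return useTanh ? std::tanh(x) : x; }

static double activateDeriv(bool useTanh, double x) {
    if (!useTanh) return 1.0;
    const double t = std::tanh(x);
    return 1.0 - t * t;
}

// Forward pass storing the pre-activation values x of every non-input layer.
std::vector<std::vector<double>> forward(const std::vector<SketchLayer>& layers,
                                         const std::vector<double>& G) {
    std::vector<std::vector<double>> xs;
    std::vector<double> y = G;
    for (const SketchLayer& layer : layers) {
        std::vector<double> x = layer.biases;
        for (std::size_t k = 0; k < x.size(); ++k)
            for (std::size_t j = 0; j < y.size(); ++j) x[k] += layer.weights[k][j] * y[j];
        y.assign(x.size(), 0.0);
        for (std::size_t k = 0; k < x.size(); ++k) y[k] = activate(layer.useTanh, x[k]);
        xs.push_back(x);
    }
    return xs;
}

// Reverse mode for a single output E: start with delta = f'(x_out), push it
// back through each layer's weights and multiply by f'(x) of every hidden
// layer; at the input layer the result is dE/dG.
std::vector<double> dEdG(const std::vector<SketchLayer>& layers, const std::vector<double>& G) {
    assert(!layers.empty() && layers.back().biases.size() == 1);
    const std::vector<std::vector<double>> xs = forward(layers, G);
    std::vector<double> delta = {activateDeriv(layers.back().useTanh, xs.back()[0])};
    for (std::size_t l = layers.size(); l-- > 0;) {
        const SketchLayer& layer = layers[l];
        const std::size_t nPrev = layer.weights[0].size();
        std::vector<double> back(nPrev, 0.0);
        for (std::size_t j = 0; j < nPrev; ++j)
            for (std::size_t k = 0; k < delta.size(); ++k) back[j] += layer.weights[k][j] * delta[k];
        if (l > 0)  // previous layer is a hidden layer: apply its f'(x)
            for (std::size_t j = 0; j < nPrev; ++j)
                back[j] *= activateDeriv(layers[l - 1].useTanh, xs[l - 1][j]);
        delta = back;
    }
    return delta;
}
```

The single-output restriction stated above shows up in the sketch as the seed vector delta holding exactly one entry.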

◆ calculateDEdc()

void NeuralNetwork::calculateDEdc(double *dEdc) const

Calculate derivative of output neuron with respect to connections.

- Parameters
  - [out] dEdc: Array containing derivative (length is number of connections, see getNumConnections()).

CAUTION: This works only for neural networks with a single output neuron!

Returns \(\left(\frac{dE}{dc_i}\right)_{i=1,\ldots,N}\), where \(E\) is the output neuron and \(\left(c_i\right)_{i=1,\ldots,N}\) are the \(N\) connections (weights and biases) of the neural network. See setConnections() for details on the order of weights and biases.

Definition at line 444 of file NeuralNetwork.cpp.

References biasOffset, layers, normalizeNeurons, numConnections, numLayers, outputLayer, and weightOffset.

Referenced by nnp::Training::calculateWeightDerivatives(), nnp::Training::dPdc(), and nnp::Training::update().
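The derivatives with respect to connections follow from the same chain rule: writing \(x^i_k\) for the value of neuron \(k\) in layer \(i\) before the activation function, \(\frac{\partial E}{\partial a^{i-1,i}_{jk}} = \frac{\partial E}{\partial x^i_k} y^{i-1}_j\) and \(\frac{\partial E}{\partial b^i_k} = \frac{\partial E}{\partial x^i_k}\). The sketch below writes this out for a hypothetical network with layer sizes \(\{n_0, n_1, 1\}\), a tanh hidden layer and identity output, using the connection order from setConnections(); TinyNet and its members are illustrative names only, not n2p2 code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical network with layer sizes {n0, n1, 1}: tanh hidden layer,
// identity output. Connections c follow the setConnections() order:
// a^{01} (j outer, k inner), b^1, a^{12}, b^2.
struct TinyNet {
    std::size_t n0, n1;

    std::size_t w01(std::size_t j, std::size_t k) const { return j * n1 + k; }
    std::size_t b1(std::size_t k) const { return n0 * n1 + k; }
    std::size_t w12(std::size_t j) const { return n0 * n1 + n1 + j; }
    std::size_t b2() const { return n0 * n1 + 2 * n1; }
    std::size_t numConnections() const { return n0 * n1 + 2 * n1 + 1; }

    // Hidden values y^1_k = tanh(b^1_k + sum_j a^{01}_{jk} G_j).
    std::vector<double> hidden(const std::vector<double>& c, const std::vector<double>& G) const {
        std::vector<double> y(n1);
        for (std::size_t k = 0; k < n1; ++k) {
            double x = c[b1(k)];
            for (std::size_t j = 0; j < n0; ++j) x += c[w01(j, k)] * G[j];
            y[k] = std::tanh(x);
        }
        return y;
    }

    // Output E = b^2 + sum_j a^{12}_{j0} y^1_j.
    double output(const std::vector<double>& c, const std::vector<double>& G) const {
        const std::vector<double> y = hidden(c, G);
        double E = c[b2()];
        for (std::size_t j = 0; j < n1; ++j) E += c[w12(j)] * y[j];
        return E;
    }

    // Analytic dE/dc in the same order as c.
    std::vector<double> dEdc(const std::vector<double>& c, const std::vector<double>& G) const {
        assert(c.size() == numConnections() && G.size() == n0);
        const std::vector<double> y = hidden(c, G);
        std::vector<double> d(numConnections(), 0.0);
        d[b2()] = 1.0;                                         // identity output: dE/dx = 1
        for (std::size_t k = 0; k < n1; ++k) {
            d[w12(k)] = y[k];                                  // dE/da^{12}_{k0} = y^1_k
            const double delta = c[w12(k)] * (1.0 - y[k] * y[k]);  // dE/dx^1_k
            d[b1(k)] = delta;                                  // dE/db^1_k
            for (std::size_t j = 0; j < n0; ++j) d[w01(j, k)] = delta * G[j];
        }
        return d;
    }
};
```

Comparing such an analytic gradient against central finite differences of output() is a cheap way to validate the ordering.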

◆ calculateDFdc()

void NeuralNetwork::calculateDFdc(double *dFdc, double const *const &dGdxyz) const

Calculate "second" derivative of output with respect to connections.

- Parameters
  - [out] dFdc: Array containing derivative (length is number of connections, see getNumConnections()).
  - [in] dGdxyz: Array containing derivative of input neurons with respect to coordinates \(\frac{\partial G_j}{\partial x_{l, \gamma}}\).

CAUTION: This works only for neural networks with a single output neuron!

In the context of the neural network potentials this function is used to calculate derivatives of forces (or force contributions) with respect to connections. The force component \(\gamma\) (where \(\gamma\) is one of \(x,y,z\)) of particle \(l\) is

\[ F_{l, \gamma} = - \frac{\partial}{\partial x_{l, \gamma}} \sum_i^{N} E_i = - \sum_i^N \sum_j^M \frac{\partial E_i}{\partial G_j} \frac{\partial G_j}{\partial x_{l, \gamma}}, \]

where \(N\) is the number of particles in the system and \(M\) is the number of symmetry functions (number of input neurons). Hence the derivative of \(F_{l, \gamma}\) with respect to the neural network connection \(c_n\) is

\[ \frac{\partial}{\partial c_n} F_{l, \gamma} = - \sum_i^N \sum_j^M \frac{\partial^2 E_i}{\partial c_n \partial G_j} \frac{\partial G_j}{\partial x_{l, \gamma}}. \]

Thus, with given \(\frac{\partial G_j}{\partial x_{l, \gamma}}\) this function calculates

\[ \sum_j^M \frac{\partial^2 E}{\partial c_n \partial G_j} \frac{\partial G_j}{\partial x_{l, \gamma}} \]

for the current network state and returns it via the output array.

Definition at line 490 of file NeuralNetwork.cpp.

References calculateD2EdGdc(), calculateDEdb(), calculateDxdG(), layers, numBiases, and numConnections.

Referenced by nnp::Training::calculateWeightDerivatives(), nnp::Training::dPdc(), and nnp::Training::update().
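For a small network the contracted second derivative can be written out by hand. Assume hypothetical layer sizes \(\{n_0, n_1, 1\}\), a tanh hidden layer and identity output, and let \(s_j = \frac{\partial G_j}{\partial x_{l,\gamma}}\), \(u_k = \sum_j a^{01}_{jk} s_j\) and \(y_k = \tanh(x_k)\). Then \(g = \sum_j \frac{\partial E}{\partial G_j} s_j = \sum_k a^{12}_{k0} (1 - y_k^2) u_k\), and differentiating \(g\) with respect to each connection yields the array described above. SmallNet, directionalDEdG() and dgdc() are hypothetical names; this is a sketch, not n2p2's calculateDFdc().

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical network {n0, n1, 1}, tanh hidden layer, identity output;
// c is ordered [a01 (j outer, k inner) | b1 | a12 | b2] as in setConnections().
struct SmallNet {
    std::size_t n0, n1;
    std::size_t w01(std::size_t j, std::size_t k) const { return j * n1 + k; }
    std::size_t b1(std::size_t k) const { return n0 * n1 + k; }
    std::size_t w12(std::size_t k) const { return n0 * n1 + n1 + k; }
    std::size_t size() const { return n0 * n1 + 2 * n1 + 1; }

    // y_k = tanh(b1_k + sum_j a01_jk G_j) and u_k = sum_j a01_jk s_j.
    void hidden(const std::vector<double>& c, const std::vector<double>& G,
                const std::vector<double>& s, std::vector<double>& y,
                std::vector<double>& u) const {
        y.assign(n1, 0.0);
        u.assign(n1, 0.0);
        for (std::size_t k = 0; k < n1; ++k) {
            double x = c[b1(k)];
            for (std::size_t j = 0; j < n0; ++j) {
                x += c[w01(j, k)] * G[j];
                u[k] += c[w01(j, k)] * s[j];
            }
            y[k] = std::tanh(x);
        }
    }

    // g = sum_j dE/dG_j * s_j = sum_k a12_k (1 - y_k^2) u_k.
    double directionalDEdG(const std::vector<double>& c, const std::vector<double>& G,
                           const std::vector<double>& s) const {
        std::vector<double> y, u;
        hidden(c, G, s, y, u);
        double g = 0.0;
        for (std::size_t k = 0; k < n1; ++k) g += c[w12(k)] * (1.0 - y[k] * y[k]) * u[k];
        return g;
    }

    // dg/dc_n = sum_j d2E/(dc_n dG_j) s_j, in setConnections() order.
    std::vector<double> dgdc(const std::vector<double>& c, const std::vector<double>& G,
                             const std::vector<double>& s) const {
        assert(c.size() == size() && G.size() == n0 && s.size() == n0);
        std::vector<double> y, u;
        hidden(c, G, s, y, u);
        std::vector<double> d(size(), 0.0);  // output bias does not enter g: stays 0
        for (std::size_t k = 0; k < n1; ++k) {
            const double fp = 1.0 - y[k] * y[k];   // tanh'(x_k)
            const double fpp = -2.0 * y[k] * fp;   // tanh''(x_k)
            const double a = c[w12(k)];
            d[w12(k)] = fp * u[k];
            d[b1(k)] = a * fpp * u[k];
            for (std::size_t j = 0; j < n0; ++j)
                d[w01(j, k)] = a * (fpp * G[j] * u[k] + fp * s[j]);
        }
        return d;
    }
};
```

Per the force expression above, multiplying by \(-1\) and summing over atoms would then give \(\frac{\partial F_{l,\gamma}}{\partial c_n}\).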

◆ writeConnections()

void NeuralNetwork::writeConnections(std::ofstream &file) const

Write connections to file.

- Parameters
  - [in,out] file: File stream to write to.

Definition at line 529 of file NeuralNetwork.cpp.

References nnp::appendLinesToFile(), nnp::createFileHeader(), layers, numLayers, and nnp::strpr().

◆ getNeuronStatistics()

void NeuralNetwork::getNeuronStatistics(long *count, double *min, double *max, double *sum, double *sum2) const

Return gathered neuron statistics.

- Parameters
  - [out] count: Number of neuron output value calculations.
  - [out] min: Minimum neuron value encountered.
  - [out] max: Maximum neuron value encountered.
  - [out] sum: Sum of all neuron values encountered.
  - [out] sum2: Sum of squares of all neuron values encountered.

CAUTION: This works only for neural networks with a single output neuron!

When neuron values are calculated (e.g. when propagate() is called), statistics about the encountered values are gathered automatically. The internal counters can be reset by calling resetNeuronStatistics(). Neuron values are ordered layer by layer:

\[ \underbrace{y^0_1, \ldots, y^0_{N_0}}_\text{Input layer}, \underbrace{y^1_1, \ldots, y^1_{N_1}}_{\text{Hidden Layer } 1}, \ldots, \underbrace{y^p_1, \ldots, y^p_{N_p}}_\text{Output layer}, \]

where \(y^m_i\) is neuron \(i\) in layer \(m\) and \(N_m\) is the total number of neurons in this layer.

Definition at line 915 of file NeuralNetwork.cpp.
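The five accumulated quantities suffice to derive the mean and (population) standard deviation of each neuron value: \(\bar{y} = \text{sum}/\text{count}\) and \(\sigma^2 = \text{sum2}/\text{count} - \bar{y}^2\). A sketch with a hypothetical per-neuron accumulator NeuronStats, plus a hypothetical flatNeuronIndex() giving the position of \(y^m_i\) in the layer-by-layer ordering above (0-based indices); neither is part of n2p2.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical per-neuron accumulator holding the five quantities reported by
// getNeuronStatistics(); reset() plays the role of resetNeuronStatistics().
struct NeuronStats {
    long count = 0;
    double min = std::numeric_limits<double>::max();
    double max = std::numeric_limits<double>::lowest();
    double sum = 0.0;
    double sum2 = 0.0;

    void record(double value) {
        ++count;
        min = std::min(min, value);
        max = std::max(max, value);
        sum += value;
        sum2 += value * value;
    }

    void reset() { *this = NeuronStats(); }

    // Derived values (require count > 0).
    double mean() const { return sum / static_cast<double>(count); }
    double variance() const {
        const double m = mean();
        return sum2 / static_cast<double>(count) - m * m;  // population variance
    }
    // Clamp tiny negative values caused by floating-point cancellation.
    double stddev() const { return std::sqrt(std::max(0.0, variance())); }
};

// Position of neuron i of layer m in the layer-by-layer ordering.
std::size_t flatNeuronIndex(const std::vector<std::size_t>& numNeuronsPerLayer,
                            std::size_t m, std::size_t i) {
    assert(m < numNeuronsPerLayer.size() && i < numNeuronsPerLayer[m]);
    std::size_t index = 0;
    for (std::size_t l = 0; l < m; ++l) index += numNeuronsPerLayer[l];
    return index + i;
}
```

The sum-of-squares formula is convenient for streaming updates but can lose precision when the variance is small relative to the mean.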

◆ resetNeuronStatistics()

void NeuralNetwork::resetNeuronStatistics()

Reset neuron statistics.

Counters and summation variables for neuron statistics are reset.

Definition at line 898 of file NeuralNetwork.cpp.

◆ getMemoryUsage()

long NeuralNetwork::getMemoryUsage()

Definition at line 981 of file NeuralNetwork.cpp.

References getNumNeurons(), numLayers, and numWeights.

◆ info()

vector<string> NeuralNetwork::info() const

Print neural network architecture.

Definition at line 996 of file NeuralNetwork.cpp.

References AF_COS, AF_EXP, AF_GAUSSIAN, AF_HARMONIC, AF_IDENTITY, AF_LOGISTIC, AF_RELU, AF_REVLOGISTIC, AF_SOFTPLUS, AF_TANH, layers, numBiases, numConnections, numLayers, numWeights, and nnp::strpr().

◆ calculateDEdb()

void NeuralNetwork::calculateDEdb(double *dEdb) const [private]

Calculate derivative of output neuron with respect to biases.

- Parameters
  - [out] dEdb: Array containing derivatives (length is number of biases).

CAUTION: This works only for neural networks with a single output neuron!

Similar to calculateDEdc() but includes only biases. Used internally by calculateDFdc().

Definition at line 593 of file NeuralNetwork.cpp.

References biasOnlyOffset, layers, normalizeNeurons, numLayers, and outputLayer.

Referenced by calculateDFdc().

◆ calculateDxdG()

void NeuralNetwork::calculateDxdG(int index) const [private]

Calculate derivative of neuron values before activation function with respect to input neuron.

- Parameters
  - [in] index: Index of input neuron the derivative will be calculated for.

There is no output array; the derivatives are stored internally for each neuron in Neuron::dxdG. Used internally by calculateDFdc().

Definition at line 628 of file NeuralNetwork.cpp.

References layers, normalizeNeurons, and numLayers.

Referenced by calculateDFdc().
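The quantities stored in Neuron::dxdG can be computed with a forward sweep (forward-mode differentiation): \(\frac{\partial x^1_k}{\partial G_i} = a^{01}_{ik}\) and \(\frac{\partial x^m_k}{\partial G_i} = \sum_j a^{m-1,m}_{jk} f'(x^{m-1}_j) \frac{\partial x^{m-1}_j}{\partial G_i}\). The sketch below assumes tanh in every non-input layer, ignores neuron normalization, and returns only the last layer's derivatives instead of storing them per neuron; TanhLayer, dxdG() and preActivation() are hypothetical names, not n2p2 code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical layer with tanh activation; weights[k][j] connects previous
// neuron j to neuron k.
struct TanhLayer {
    std::vector<std::vector<double>> weights;
    std::vector<double> biases;
};

// Pre-activation values x of the last layer for input G.
std::vector<double> preActivation(const std::vector<TanhLayer>& layers, std::vector<double> y) {
    std::vector<double> x;
    for (const TanhLayer& L : layers) {
        x.assign(L.biases.begin(), L.biases.end());
        for (std::size_t k = 0; k < x.size(); ++k)
            for (std::size_t j = 0; j < y.size(); ++j) x[k] += L.weights[k][j] * y[j];
        y.resize(x.size());
        for (std::size_t k = 0; k < x.size(); ++k) y[k] = std::tanh(x[k]);
    }
    return x;
}

// Forward mode: propagate d(values)/dG_index alongside the values themselves
// and return dx/dG_index for the last layer.
std::vector<double> dxdG(const std::vector<TanhLayer>& layers,
                         const std::vector<double>& G, std::size_t index) {
    assert(!layers.empty() && index < G.size());
    std::vector<double> y = G;               // values of the previous layer
    std::vector<double> dy(G.size(), 0.0);   // their derivative w.r.t. G_index
    dy[index] = 1.0;
    std::vector<double> x, dx;
    for (const TanhLayer& L : layers) {
        x.assign(L.biases.begin(), L.biases.end());
        dx.assign(L.biases.size(), 0.0);
        for (std::size_t k = 0; k < x.size(); ++k)
            for (std::size_t j = 0; j < y.size(); ++j) {
                x[k] += L.weights[k][j] * y[j];
                dx[k] += L.weights[k][j] * dy[j];
            }
        y.resize(x.size());
        dy.resize(x.size());
        for (std::size_t k = 0; k < x.size(); ++k) {
            y[k] = std::tanh(x[k]);
            dy[k] = (1.0 - y[k] * y[k]) * dx[k];  // chain rule through tanh
        }
    }
    return dx;
}
```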

◆ calculateD2EdGdc()

void NeuralNetwork::calculateD2EdGdc(int index, double const *const &dEdb, double *d2EdGdc) const [private]

Calculate second derivative of output neuron with respect to input neuron and connections.

- Parameters
  - [in] index: Index of input neuron the derivative will be calculated for.
  - [in] dEdb: Derivatives of output neuron with respect to biases. See calculateDEdb().
  - [out] d2EdGdc: Array containing the derivatives (ordered as described in setConnections()).

CAUTION: This works only for neural networks with a single output neuron!

Used internally by calculateDFdc().

Definition at line 659 of file NeuralNetwork.cpp.

References biasOffset, biasOnlyOffset, layers, normalizeNeurons, numLayers, outputLayer, and weightOffset.

Referenced by calculateDFdc().

◆ allocateLayer()

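void NeuralNetwork::allocateLayer(Layer &layer, int numNeuronsPrevLayer, int numNeurons, ActivationFunction activationFunction) [private]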

Allocate a single layer.

- Parameters
  - [in,out] layer: Neural network layer to allocate.
  - [in] numNeuronsPrevLayer: Number of neurons in the previous layer.
  - [in] numNeurons: Number of neurons in this layer.
  - [in] activationFunction: Activation function to use for all neurons in this layer.

This function is internally called by the constructor to allocate all neurons layer by layer.

Definition at line 721 of file NeuralNetwork.cpp.

References nnp::NeuralNetwork::Layer::activationFunction, nnp::NeuralNetwork::Neuron::bias, nnp::NeuralNetwork::Neuron::count, nnp::NeuralNetwork::Neuron::d2fdx2, nnp::NeuralNetwork::Neuron::dfdx, nnp::NeuralNetwork::Neuron::dxdG, nnp::NeuralNetwork::Neuron::max, nnp::NeuralNetwork::Neuron::min, nnp::NeuralNetwork::Layer::neurons, nnp::NeuralNetwork::Layer::numNeurons, nnp::NeuralNetwork::Layer::numNeuronsPrevLayer, nnp::NeuralNetwork::Neuron::sum, nnp::NeuralNetwork::Neuron::sum2, nnp::NeuralNetwork::Neuron::value, nnp::NeuralNetwork::Neuron::weights, and nnp::NeuralNetwork::Neuron::x.

Referenced by NeuralNetwork().

◆ propagateLayer()

void NeuralNetwork::propagateLayer(Layer &layer, Layer &layerPrev) [private]

Propagate information from one layer to the next.

- Parameters
  - [in,out] layer: Neuron values in this layer will be calculated.
  - [in] layerPrev: Neuron values in this layer will be used as input.

This function is called in a loop by propagate(), once per layer.

Definition at line 761 of file NeuralNetwork.cpp.

References nnp::NeuralNetwork::Layer::activationFunction, AF_COS, AF_EXP, AF_GAUSSIAN, AF_HARMONIC, AF_IDENTITY, AF_LOGISTIC, AF_RELU, AF_REVLOGISTIC, AF_SOFTPLUS, AF_TANH, nnp::NeuralNetwork::Neuron::bias, nnp::NeuralNetwork::Neuron::count, nnp::NeuralNetwork::Neuron::d2fdx2, nnp::NeuralNetwork::Neuron::dfdx, EXP_LIMIT, nnp::NeuralNetwork::Neuron::max, nnp::NeuralNetwork::Neuron::min, nnp::NeuralNetwork::Layer::neurons, normalizeNeurons, nnp::NeuralNetwork::Layer::numNeurons, nnp::NeuralNetwork::Layer::numNeuronsPrevLayer, nnp::NeuralNetwork::Neuron::sum, nnp::NeuralNetwork::Neuron::sum2, nnp::NeuralNetwork::Neuron::value, nnp::NeuralNetwork::Neuron::weights, and nnp::NeuralNetwork::Neuron::x.

Referenced by propagate().

Member Data Documentation

◆ normalizeNeurons

bool NeuralNetwork::normalizeNeurons [private]

Whether neurons are normalized.

Definition at line 407 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdb(), calculateDEdc(), calculateDEdG(), calculateDxdG(), NeuralNetwork(), propagateLayer(), and setNormalizeNeurons().

◆ numWeights

int NeuralNetwork::numWeights [private]

Number of NN weights only.

Definition at line 409 of file NeuralNetwork.h.

Referenced by getMemoryUsage(), getNumWeights(), info(), and NeuralNetwork().

◆ numBiases

int NeuralNetwork::numBiases [private]

Number of NN biases only.

Definition at line 411 of file NeuralNetwork.h.

Referenced by calculateDFdc(), getNumBiases(), info(), and NeuralNetwork().

◆ numConnections

int NeuralNetwork::numConnections [private]

Number of NN connections (weights + biases).

Definition at line 413 of file NeuralNetwork.h.

Referenced by calculateDEdc(), calculateDFdc(), getNumConnections(), info(), initializeConnectionsRandomUniform(), and NeuralNetwork().

◆ numLayers

int NeuralNetwork::numLayers [private]

Total number of layers (includes input and output layers).

Definition at line 415 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdb(), calculateDEdc(), calculateDxdG(), getConnections(), getMemoryUsage(), getNeuronStatistics(), getNumNeurons(), info(), modifyConnections(), NeuralNetwork(), propagate(), resetNeuronStatistics(), setConnections(), writeConnections(), and ~NeuralNetwork().

◆ numHiddenLayers

int NeuralNetwork::numHiddenLayers [private]

Number of hidden layers.

Definition at line 417 of file NeuralNetwork.h.

Referenced by calculateDEdG(), and NeuralNetwork().

◆ weightOffset

int *NeuralNetwork::weightOffset [private]

Offset address of weights per layer in combined weights+bias array.

Definition at line 419 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdc(), NeuralNetwork(), and ~NeuralNetwork().

◆ biasOffset

int *NeuralNetwork::biasOffset [private]

Offset address of biases per layer in combined weights+bias array.

Definition at line 421 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdc(), NeuralNetwork(), and ~NeuralNetwork().

◆ biasOnlyOffset

int *NeuralNetwork::biasOnlyOffset [private]

Offset address of biases per layer in bias-only array.

Definition at line 423 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdb(), NeuralNetwork(), and ~NeuralNetwork().

◆ inputLayer

Layer *NeuralNetwork::inputLayer [private]

Pointer to input layer.

Definition at line 425 of file NeuralNetwork.h.

Referenced by NeuralNetwork(), and setInput().

◆ outputLayer

Layer *NeuralNetwork::outputLayer [private]

Pointer to output layer.

Definition at line 427 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdb(), calculateDEdc(), getOutput(), modifyConnections(), modifyConnections(), and NeuralNetwork().

◆ layers

Layer *NeuralNetwork::layers [private]

Neural network layers.

Definition at line 429 of file NeuralNetwork.h.

Referenced by calculateD2EdGdc(), calculateDEdb(), calculateDEdc(), calculateDEdG(), calculateDFdc(), calculateDxdG(), getConnections(), getNeuronStatistics(), getNumNeurons(), info(), modifyConnections(), NeuralNetwork(), propagate(), resetNeuronStatistics(), setConnections(), writeConnections(), and ~NeuralNetwork().

The documentation for this class was generated from the following files:

- /home/runner/work/n2p2/n2p2/src/libnnp/NeuralNetwork.h

- /home/runner/work/n2p2/n2p2/src/libnnp/NeuralNetwork.cpp